Posts posted by C-Man

-

This show has completely escaped me until the past few weeks. HBO has been running the past seasons in advance of S4's premiere on Sunday. I thought it was a dead show and they were showing marathons during the holidays like they did with Veep and other HBO properties. Saw about 5 mins of a scene and changed the channel. Then Marisa Abela pops up on Seth Meyers last night and ...

On 9/2/2022 at 11:39 PM, LonghornSean said: Only through three episodes in the new season but I dig it, despite most of the financial maneuvering being mumbo jumbo to me.

The musical score is very cool. Gives it a unique and tense vibe. And Yasmin. Got damn.

Also I think I probably wouldn’t like it as much if it was the same show but in New York.

On 9/7/2022 at 10:19 AM, yoladu said: I pretty much watch it for this.

On 9/10/2022 at 9:27 PM, Frank The Tank said: Yasmine can fucking get it.

... quick Google search and yes, yes and absolutely yes. She's gorgeous.

That's all I have for now. Adding this to the list of things I need to check out at some point.

-

Oil company execs not climbing all over themselves to set up shop in Venezuela. Hmm, can't imagine why.

https://www.cnn.com/2026/01/09/business/oil-executives-trump-meeting-venezuela

-

Edited by C-Man

On 3/27/2020 at 1:17 PM, Jerry Callo said: Just got some sourdough starter from a neighbor. With some flour and about 15 hours of labor, we should have some sourdough bread by Sunday.

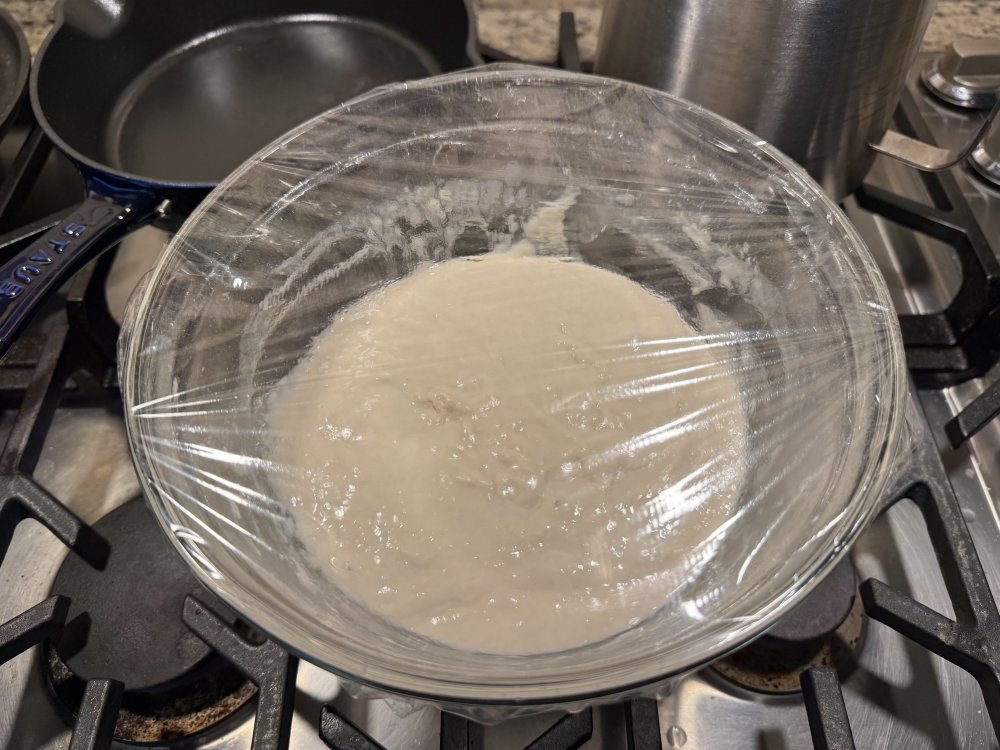

So I've had an envelope of sourdough starter from Kensington in Toronto sitting in my desk drawer for several years. Supposedly, this stuff hails back to 1850s-era San Francisco or whatever. Probably marketing. Anyway, my one-time BBQ cook buddy, who dabbles in all kinds of cooking/smoking/baking, has used his Kensington sourdough starter to make some killer-looking loaves. I've put it off for multiple years but finally bit the bullet and started cultivating my starter one week ago today.

And I've got a few questions. On Day 3 (I think), I fucked up and used Dallas tap water -- about 1/4 cup of it. I know chlorine is not good for sourdough starter and the process, but I was hoping I didn't murder it. It bubbled quite well and looked fine and dandy until I threw half of it in the trash on Day 4 (or 5) as instructed. It's seemed a little dormant since then. Here's how it looks today, on Day 7, when the instructions say I should have a fully developed sourdough starter.

(Hope you can see this. If not, I'll need to try and add from my phone.)

PS -- this stuff kind of stinks. Guess that's where the "sour" in sourdough comes from. Tell me that's normal before I poison my family with bad sourdough -- if this shit ultimately reacts the way it's seemingly supposed to.

-

1 hour ago, Ghost of NMAS said: Maybe someone should join ICE just so they can shoot Vance with absolute immunity

7 minutes ago, Brisketexan said: Find the hole in that logic, MAGA.

If everything ICE does is per se legal because they have absolute immunity in everything they do under color of the badge.....and a member of ICE determines that Vance is an existential threat....and said member uses deadly force to prevent Vance from harming America and Americans.....that ICE agent has absolute immunity from prosecution for his action taken under color of his badge.

That's legit unassailable logic, if you assume and accept the foundational premise: whatever an ICE agent does, he has absolute immunity.

So, which is it, JD? Do they have absolute immunity, or don't they?

But, they'd simply say it doesn't apply in this case because, well, reasons.

-

X Didn't Fix Grok's ‘Undressing’ Problem. It Just Makes People Pay for It

X is only allowing “verified” users to create images with Grok. Experts say it represents the “monetization of abuse”—and anyone can still generate images on Grok’s app and website.

https://archive.ph/uiRr9#selection-709.0-715.181

This guy's cocksuckery knows no bounds.

After creating thousands of “undressing” pictures of women and sexualized imagery of apparent minors, Elon Musk’s X has apparently limited who can generate images with Grok. However, despite the changes, the chatbot is still being used to create “undressing” sexualized images on the platform.

On Friday morning, the Grok account on X started responding to some users’ requests with a message saying that image generation and editing are “currently limited to paying subscribers.” The message also includes a link pushing people towards the social media platform’s $395 annual subscription tier. In one test of the system requesting Grok create an image of a tree, the system returned the same message.

The apparent change comes after days of growing outrage against and scrutiny of Musk’s X and xAI, the company behind the Grok chatbot. The companies face an increasing number of investigations from regulators around the world over the creation of nonconsensual explicit imagery and alleged sexual images of children. British prime minister Keir Starmer has not ruled out banning X in the country and said the actions have been “unlawful.”

Neither X nor xAI, the Musk-owned company behind Grok, has confirmed that it has made image generation and editing a paid-only feature. An X spokesperson acknowledged WIRED’s inquiry but did not provide comment ahead of publication. X has previously said it takes “action against illegal content on X,” including instances of child sexual abuse material. While Apple and Google have previously banned apps with similar “nudify” features, X and Grok remain available in their respective app stores. xAI did not immediately respond to WIRED's request for comment.

For more than a week, users on X have been asking the chatbot to edit images of women to remove their clothes—often asking for the image to contain a “string” or “transparent” bikini. While a public feed of images created by Grok contained far fewer results of these “undressing” images on Friday, it still created sexualized images when prompted to by X users with paid for “verified” accounts.

“We observe the same kind of prompt, we observe the same kind of outcome, just fewer than before,” Paul Bouchaud, lead researcher at Paris-based nonprofit AI Forensics, tells WIRED. “The model can continue to generate bikini [images],” they say.

A WIRED review of some Grok posts on Friday morning identified Grok generating images in response to user requests for images that “put her in latex lingerie” and “put her in a plastic bikini and cover her in donut white glaze.” The images appear behind a “content warning” box saying that adult material is displayed.

On Wednesday, WIRED revealed that Grok’s standalone website and app, which is separate from the version on X, has also been used in recent months to create highly graphic and sometimes violent sexual videos, including celebrities and other real people. Bouchaud says it is still possible to use Grok to make these videos. “I was able to generate a video with sexually explicit content without any restriction from an unverified account,” they say.

While WIRED’s test of image generation using Grok on X using a free account did not allow any images to be created, using a free account on Grok’s app and website still generated images.

The change on X could immediately limit the amount of sexually explicit and harmful material the platform is creating, experts say. But it has also been criticized as a minimal step that acts as a band-aid to the real harms caused by nonconsensual intimate imagery.

“The recent decision to restrict access to paying subscribers is not only inadequate—it represents the monetization of abuse,” Emma Pickering, head of technology-facilitated abuse at UK domestic abuse charity Refuge, said in a statement. “While limiting AI image generation to paid users may marginally reduce volume and improve traceability, the abuse has not been stopped. It has simply been placed behind a paywall, allowing X to profit from harm.”

The British government also said, according to reporting from the BBC, that the change to limit image generation to paid-only accounts is “insulting” to those who have been impacted. It said that it “simply turns an AI feature that allows the creation of unlawful images into a premium service.”

“While it may allow X to share information with law enforcement about perpetrators, it doesn’t address the fundamental issue of the model’s capabilities and alignment,” says Henry Ajder, a deepfake expert who has tracked harmful uses of the technology for years. “For the cost of a month’s membership, it seems likely I could still create the offending content using a fake name and a disposable payment method.”

“They could have removed abusive material, but they did not,” AI Forensics’ Bouchaud says. “They could have disabled Grok to generate images altogether, but they did not. They could have disabled the Grok application to generate pornographic videos.”

-

14 minutes ago, Red Five said: Vance does this really fucking annoying thing where he says "The woman was obviously trying to kill the ICE agent, and no one is arguing that". Um, 1) No she wasn't, and 2) The entire country is arguing that.

And then reporters hit him with a followup question: "How do you know this?"

Vance: "Well, we're just going to have to figure that out."

(end scene)

-

Catholic Paper Calls JD Vance a Moral Stain for ICE Victim Smear

Yahoo News

A leading Catholic paper has branded JD Vance a “moral stain” and accused the vice president of having a “twisted and wrongheaded view of Christianity” for his comments on a woman killed by ICE. Renee...

On one hand, who gives a fuck what a Catholic paper thinks of the couch fucker? OTOH, I'm a believer that there's no "bad" press when it comes to shit like this. It all adds up. If this opens one idiot's eyes to the appalling government we have in place today, then it worked.

-

53 minutes ago, atomheartbevo said: You're over thinking it. Peter Thiel is the reason. Go look at Vance's time in Silicon Valley.

Yep. JD was a fat dork that probably got shoved in lockers in HS. His motivation is simply power, and he doesn't care what kind of chameleon suit he has to wear to get it.

23 minutes ago, Red Five said: Noem keeps talking about how the ICE agents were stuck in the snow. In the videos there is barely any snow on the ground. What is the purpose of this bizarre lie?

-

1 hour ago, Horn Under a Bad Sign said: I mean, you're watching the disintegration of the state you've known --- for bad or for good --- for 50 years and they're throwing Tucker fucking Carlson at you on TV as they're collapsing?

Is there any better indicator of the moral depths to which they've fallen?

You talking about Iran or the US?

-

49 minutes ago, Chopper said: In unrelated news, pedo guy Elon Musk's ai generates pedo images because pedo guy Elon Musk likes to see pedo images on xitter

https://www.cnn.com/2026/01/08/tech/elon-musk-xai-digital-undressing

More on this front:

Musk’s Grok AI Generated Thousands of Undressed Images Per Hour on X

Elon Musk’s X has become a top site for images of people that have been non-consensually undressed by AI, according to a third-party analysis, with thousands of instances each hour over a day earlier this week.

Since late December, X users have increasingly prompted Grok, the AI chatbot tied to the social network, to alter pictures people post of themselves. During a 24-hour analysis of images the @Grok account posted to X, the chatbot generated about 6,700 every hour that were identified as sexually suggestive or nudifying, according to Genevieve Oh, a social media and deepfake researcher. The other top five websites for such content averaged 79 new AI undressing images per hour in the 24-hour period, from January 5 to January 6, Oh found.

The scale of deepfakes on X is “unprecedented,” said Carrie Goldberg, a lawyer specializing in online sex crimes. “We’ve never had a technology that’s made it so easy to generate new images,” because Grok is free and linked to a built-in distribution system, she added.

Unlike other leading chatbots, Grok doesn’t impose many limits on users or block them from generating sexualized content of real people, including minors, said Brandie Nonnecke, senior director of policy at Americans for Responsible Innovation. Other generative AI technologies, including ones from Anthropic PBC, OpenAI and Alphabet Inc.’s Google, are “giving a good-faith effort to mitigate the creation of this content in the first place,” she said. “Obviously, xAI is different. It’s more of a free-for-all.” Musk has marketed Grok as more fun and irreverent than other chatbots, taking pride in X being a place for free speech.

X did not respond to a request for comment. Rather than preventing the chatbot from creating the content in the first place, Musk has spoken about punishing the users who ask it to. “Anyone using Grok to make illegal content will suffer the same consequences as if they upload illegal content,” Musk said in a reply to a post on X.

But that doesn’t leave many options for the victims. Maddie, who said she’s a 23-year-old pre-med student, woke up on New Year’s Day to an image that horrified her. On X, she had previously published a picture of herself with her boyfriend at a local bar, which two strangers altered using Grok. One asked Grok to remove her boyfriend and put her in a bikini. The next asked Grok to replace the bikini with dental floss. Bloomberg reviewed the images.

“My heart sank,” said Maddie, who requested anonymity over concerns about future job prospects. “I felt hopeless, helpless and just disgusted.”

Maddie said she and her friends reported the images to X through its moderation systems. She never received a response. When she reported a different post from one of the users who prompted Grok to make them, X said it “determined that there were no violations of the X rules in the content you reported,” according to a screenshot. The images were still up at the time of publication.

Victims targeted by deepfakes have taken to arguing with Grok in the comments of their posts. Grok often apologizes and says it will remove the images. But in many cases, the images remain live, and Grok continues to generate new ones. Oh calculated that 85% of Grok’s images, overall, are sexualized.

[Chart: Sexualized deepfakes posted online per hour. X's Grok is posting 84 times more deepfakes identified as sexual per hour, according to a third-party analysis of images published between January 5th and 6th. Source: Genevieve Oh]

Erotica is still a selling point for chatbots, with OpenAI planning to introduce an “adult mode” for ChatGPT in the first quarter of this year. But OpenAI’s current usage policy says the app prevents the “use of someone’s likeness, including their photorealistic image or voice, without their consent in ways that could confuse authenticity.” When tested, it responded, “I'm not able to edit photos of real people to change their clothing into sexualized attire,” and there is an explicit policy against sexualizing anyone under 18.

Grok, released in 2023, is facing mounting criticism for posting nonconsensual and sexual images, including of minors, from authorities in the European Union, UK, Malaysia, France and India. “We are aware of the fact that X or Grok is now offering a ‘Spicy Mode’ showing explicit sexual content with some output generated with childlike images,” EU commission spokesperson Thomas Regnier said at a press conference on Monday, referring to an early November update that generates suggestive material. “This is not spicy. This is illegal.”

Section 230 of the US Communications Decency Act protects platforms from being held liable for content published on them, but when it comes to AI, lawyer Goldberg said, “It’s not acting as a passive publisher. It’s actually generating and creating the image.”

The Take It Down Act, a federal law signed in 2025, holds platforms liable for the production and distribution of this kind of content, Nonnecke said. “This is a pretty good example of where that law should be actualized and put into effect.” Platforms have until May, 2026 to establish the required removal process.

One X user, an influencer who goes by BBJess, said websites had finally started to take down undressed images of her that had gone up without her consent. But Grok last week started a new flood of undressed images, said BBJess, who keeps her name anonymous to avoid real-world harassment. The posts got worse, she said, when she took to X to defend herself and criticize the deepfakes.

Mikomi, a full-time costume performance artist who posts erotica, says the issue is particularly pronounced for women like her who already share images of their bodies online. Some X users are viewing that as permission to sexualize them in ways they did not consent to. Mikomi sees images generated by Grok of her wearing specific fetish outfits, or her body contorted or placed in strange contexts. One user riffed on the fact that she is a cancer survivor. “Make her bald like if she had cancer,” the user prompted Grok.

Like many X users, Mikomi, who does not share her full name publicly to avoid being harassed in the real world, wrote a post on X warning Grok she does not consent to the AI altering her photos. “It does not work,” she said. “Blocking Grok does not work. Nothing works.” She can’t leave the platform, she adds, because it’s “vital” to her work.

“What am I supposed to do? You want me to lose my job?” she said.

-

ICE shooter has been identified as Jonathan Ross: https://www.dailymail.co.uk/news/article-15446793/ICE-Jonathan-Ross-shooting-Renee-Nicole-Good.html

-

‘Absolute Disgrace!’ Irate JD Vance Loses It on the Media for ‘Lying’ About ICE Shooting

Mediaite

Vice President JD Vance dressed down the media on Thursday over its coverage of the shooting of a Minneapolis woman by an ICE officer.

Couch Fucker says: I’ll take some questions, but I want to make just one final observation here. When I was actually walking out here, somebody sent me a photo of a CNN headline about what happened in Minneapolis. And this is the headline, I’m just going to read it: “Outrage after ICE officer kills U.S. citizen in Minneapolis.” Well, that’s one way to put it, and that is the way that many people in the corporate media have put this attack over the last 24 hours. And I say attack very, very intentionally because this was an attack on federal law enforcement; this was an attack on law and order; this was an attack on the American people.

The way that the media, by and large, has reported this story has been an absolute disgrace and it puts our law enforcement officers at risk every single day! What that headline leaves out is the fact that that very ICE officer nearly had his life ended, dragged by a car six months ago, 33 stitches in his leg. So you think maybe he’s a little bit sensitive about somebody ramming him with an automobile? What that headline leaves out is that that woman was there to interfere with a legitimate law enforcement operation in the United States of America. What that headline leaves out is that woman is part of a broader left-wing network to attack, to dox, to assault, and to make it impossible for our ICE officers to do their job.

If the media wants to tell the truth, they ought to tell the truth that a group of left-wing radicals have been working tirelessly, sometimes using domestic terror techniques, to try to make impossible for the president of the United States to do what the American people elected him to do, which is enforce our immigration laws. The president stands with ICE, I stand with ICE, we stand with all of our law enforcement officers. And part of that is recognizing that you people in the media, not everybody in this room, but many people in this room have been lying about this attack. She was trying to ram this guy with her car, he shot back, he defended himself. He’s already been seriously wounded in law enforcement operations before, and everybody who’s been repeating the lie that this is some innocent woman who was out for a drive in Minneapolis when a law enforcement officer shot at her, you should be ashamed of yourselves! Every single one of you.

Fuck you to eternity, Couch Fucker.

-

Elon Musk Shares and Endorses Shocking X Post That Says ‘White Solidarity Is the Only Way to Survive’

Mediaite

Elon Musk Endorses Shocking Post About 'Non Whites'

Elon Musk shared and endorsed a post on X that declared that white men cannot become a "minority" and "white solidarity is the only way to survive."

-

Stephen A Smith makes like $40M a year. What the fuck is he doing wading into politics? As much as I've despised him for his sports "schtick" over the years, at least politically he seemed closer to us than MAGA.

This take is beyond absurd. Stick to ball, fucko.

Stephen A. Smith says ICE agent who shot woman dead in Minnesota was 'completely justified' legally

Mail Online

Smith added, however, that if the agent had enough time to step out the way, which he appeared to, he should have shot the tyres instead of taking aim at Good.
